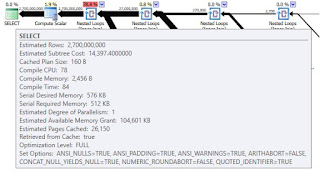


A few weeks ago I shared a query for probing execution plans for the Estimated Subtree Cost value. That query was rather simple, but it got the job done. In that post I also explained that, although there are several ways of finding missing index suggestions, they are not always very helpful on their own. After posting, I realized it would be useful to combine the two, or at least display the results in a way that makes it easy to see which execution plans include missing index warnings.
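To illustrate the idea of combining the two, here is a minimal sketch (not the query from either post) that reads showplan XML, such as a saved `.sqlplan` file or the `query_plan` column returned by `sys.dm_exec_query_plan`. For each statement it reports the `StatementSubTreeCost` attribute and whether a `MissingIndexes` element is present. The function name `summarize_plan` and the `SAMPLE` plan are hypothetical; the sample is hand-trimmed and far smaller than a real plan.

```python
import xml.etree.ElementTree as ET

# Namespace used by SQL Server showplan XML.
NS = {"sp": "http://schemas.microsoft.com/sqlserver/2004/07/showplan"}

# Hypothetical, hand-trimmed plan with two statements (real plans are much larger).
SAMPLE = """<ShowPlanXML xmlns="http://schemas.microsoft.com/sqlserver/2004/07/showplan">
  <BatchSequence><Batch><Statements>
    <StmtSimple StatementText="SELECT * FROM dbo.Orders WHERE CustomerID = 42"
                StatementSubTreeCost="12.5">
      <QueryPlan><MissingIndexes><MissingIndexGroup Impact="95.1"/></MissingIndexes></QueryPlan>
    </StmtSimple>
    <StmtSimple StatementText="SELECT 1" StatementSubTreeCost="0.0000011">
      <QueryPlan/>
    </StmtSimple>
  </Statements></Batch></BatchSequence>
</ShowPlanXML>"""


def summarize_plan(plan_xml: str) -> list:
    """Return one row per statement with its estimated subtree cost and
    whether the optimizer attached any missing index suggestions."""
    root = ET.fromstring(plan_xml)
    rows = []
    for stmt in root.iterfind(".//sp:StmtSimple", NS):
        rows.append({
            "statement": stmt.get("StatementText", "").strip(),
            "subtree_cost": float(stmt.get("StatementSubTreeCost", "0")),
            # MissingIndexes sits under StmtSimple/QueryPlan when present.
            "missing_index": stmt.find(".//sp:MissingIndexes", NS) is not None,
        })
    # Most expensive statements first, so costly plans with warnings stand out.
    rows.sort(key=lambda r: r["subtree_cost"], reverse=True)
    return rows


if __name__ == "__main__":
    for row in summarize_plan(SAMPLE):
        print(f"{row['subtree_cost']:>12.7f}  missing_index={row['missing_index']}  {row['statement']}")
```

Sorting by cost and flagging missing indexes side by side is the point: an expensive plan that also carries a missing index warning is usually a better place to start than either list on its own.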